Most AI apps don't ship. Permissions are the problem.

While LLMs promise to make your apps smarter, they introduce new problems for authorization:

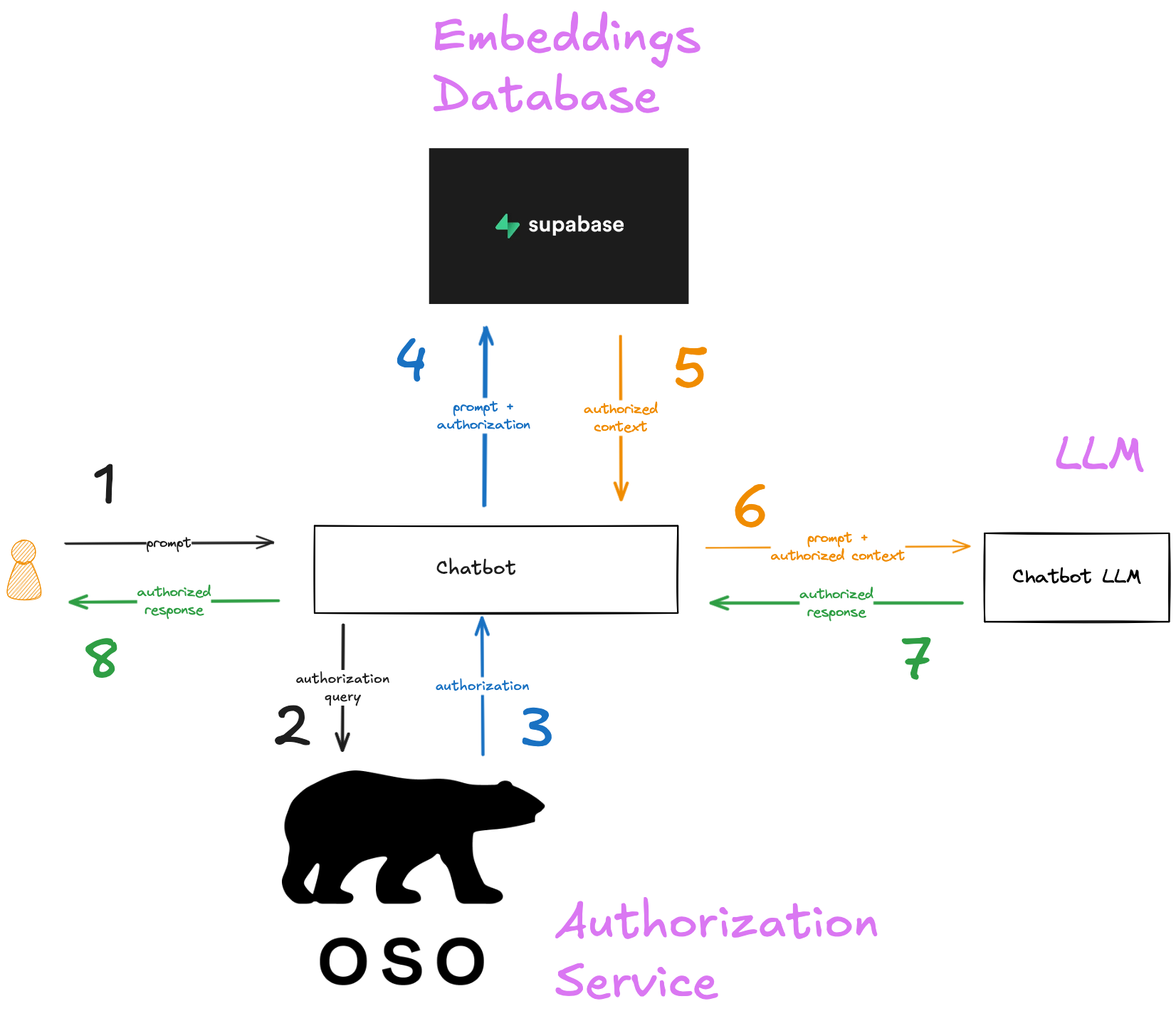

- Data flows span multiple systems and steps (RAG, APIs, embeddings).

- LLMs don’t enforce rules; they interpret them.

- LLMs need broad access to generate useful responses, but must act only on what a specific user is allowed to see or do.

- Without dynamically and tightly scoped permissions, agents will do things they shouldn’t.

Fine-Grained Permissions for AI Apps

Oso lets you define permissions in one place and enforce them everywhere—across apps, RAG pipelines, and autonomous agents:

- Enterprise search returns only what a user is allowed to see.

- RAG workflows apply access checks at retrieval.

- AI agents act on behalf of users, with tightly scoped permissions and full audit trails.
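The following minimal Python sketch shows what "access checks at retrieval" can look like. It is not Oso's API. `Chunk`, `User`, `can_read`, and `retrieve` are hypothetical names. The group-membership check stands in for a call to a central policy, and toy two-dimensional embeddings replace a real vector store. The key design choice is to filter before taking the top-k, so every retrieved slot goes to a chunk the user may read.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    id: str
    text: str
    embedding: tuple[float, ...]
    allowed_groups: frozenset[str]  # hypothetical ACL stored alongside each chunk


@dataclass(frozen=True)
class User:
    id: str
    groups: frozenset[str]


def can_read(user: User, chunk: Chunk) -> bool:
    # Stand-in for a central policy decision; here, shared group membership.
    return not user.groups.isdisjoint(chunk.allowed_groups)


def cosine(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(user: User, query: tuple[float, ...], index: list[Chunk], k: int = 3) -> list[Chunk]:
    # Authorize first, then rank: unauthorized chunks never reach the prompt,
    # and the top-k is filled only with chunks this user can read.
    visible = [c for c in index if can_read(user, c)]
    visible.sort(key=lambda c: cosine(query, c.embedding), reverse=True)
    return visible[:k]


index = [
    Chunk("hr-1", "Salary bands", (1.0, 0.0), frozenset({"hr"})),
    Chunk("eng-1", "Deploy runbook", (0.9, 0.1), frozenset({"eng"})),
    Chunk("pub-1", "Company holidays", (0.5, 0.5), frozenset({"everyone"})),
]
alice = User("alice", frozenset({"eng", "everyone"}))
print([c.id for c in retrieve(alice, (1.0, 0.0), index, k=2)])  # → ['eng-1', 'pub-1']
```

Here `hr-1` is the closest match to the query, but it never appears in Alice's results because the access check runs before ranking.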

Built for AI

The permissions layer for apps, agents, and AI

Agent-Aware ReBAC

Model user-to-agent relationships for impersonation, delegation, and multi-agent coordination

Retrieval Filtering

Ensure AI pipelines (search, RAG) return only the data a user is authorized to access

Policy Testing & Explainability

Trace and debug access decisions across agent actions and complex data flows

Compliance & Auditing

Maintain a central policy with full audit trails of agent activity and low-latency enforcement
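The following toy Python sketch makes delegation and auditing concrete. It uses one common scoping rule, which is an assumption and not necessarily how Oso models it: an agent acting for a user may perform an action only if the user holds that permission and has also delegated that action to the agent. Every decision is appended to an audit log. `USER_PERMISSIONS`, `Agent`, `AuditLog`, and `agent_can` are hypothetical names. In practice, the permission data and audit trail would live in a central policy service, not in process memory.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical permission data: (action, resource) pairs each user holds.
USER_PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "alice": {("read", "repo:api"), ("write", "repo:api"), ("read", "repo:billing")},
}


@dataclass
class Agent:
    id: str
    on_behalf_of: str            # the delegating user
    delegated_actions: set[str]  # actions that user delegated to this agent


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, agent: Agent, action: str, resource: str, allowed: bool) -> None:
        # Keep every decision, allowed or denied, with who acted for whom.
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "agent": agent.id,
            "user": agent.on_behalf_of,
            "action": action,
            "resource": resource,
            "allowed": allowed,
        })


def agent_can(agent: Agent, action: str, resource: str, log: AuditLog) -> bool:
    # Effective access is the intersection of the user's permissions
    # and the actions delegated to the agent.
    user_ok = (action, resource) in USER_PERMISSIONS.get(agent.on_behalf_of, set())
    allowed = user_ok and action in agent.delegated_actions
    log.record(agent, action, resource, allowed)
    return allowed


log = AuditLog()
bot = Agent("review-bot", on_behalf_of="alice", delegated_actions={"read"})
print(agent_can(bot, "read", "repo:api", log))      # True
print(agent_can(bot, "write", "repo:api", log))     # False: Alice can write but did not delegate it
print(agent_can(bot, "read", "repo:payroll", log))  # False: Alice herself cannot read it
print(len(log.entries))                             # 3
```

Because the check takes an intersection, an agent can never exceed the user it acts for. The audit log also records denials, not just the actions that succeeded.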